Font scaling can bite my ass.

20 Aug 2003

Update, March 2004

In writing a document or building an image, you probably set the font size in terms of pt, or points. A point is a typesetting unit equal to 1/72 of an inch (0.035277778cm for the metrically-inclined). Graphics systems work in terms of pixels, which have an indeterminate size in the real world. A pixel is simply the smallest unit the screen can plot.

Because of this, the DPI (dots-per-inch) measurement was devised. It was conceived as a way to scale point-sized glyphs to the screen, to make them appear close to their paper equivalent. The problem is, different systems use different DPI scalings.

DPI scales for some systems

| System | DPI |
| --- | --- |
| Apple Mac OS | 72dpi |
| X Window System (default) | 75dpi |
| Microsoft Windows (small) | 96dpi |
| Microsoft Windows (large) | 120dpi |

If you only work with one system (and everything you come in contact with uses it as well), you'll never notice this problem. When you intertwingle these systems, it can (and will) arise. The Internet is a prime example here. Since Microsoft basically owns the world, most people specify smaller point sizes (a higher DPI will make fonts look bigger [1]).
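To put numbers on that, here's a quick sketch that runs the formula from footnote 1 over the DPI values in the table. The function name `points_to_pixels` is made up for illustration, and reading "to integer precision" as plain truncation is an assumption; a real renderer may round differently.

```python
# DPI values taken from the table above.
SYSTEM_DPI = {
    "Apple Mac OS": 72,
    "X Window System (default)": 75,
    "Microsoft Windows (small)": 96,
    "Microsoft Windows (large)": 120,
}


def points_to_pixels(points: float, dpi: float) -> int:
    """Footnote 1: pixels = (points * dpi) / 72.

    Truncating to an integer is an assumption about what
    "to integer precision" means.
    """
    return int(points * dpi / 72)


if __name__ == "__main__":
    for system, dpi in SYSTEM_DPI.items():
        print(f"{system}: 12pt -> {points_to_pixels(12, dpi)}px")
```

Under these assumptions, the same 12pt request comes out at 12 pixels on the Mac and 16 pixels on Windows with small fonts, which is the mismatch the screenshots show.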

Font scaling across different platforms

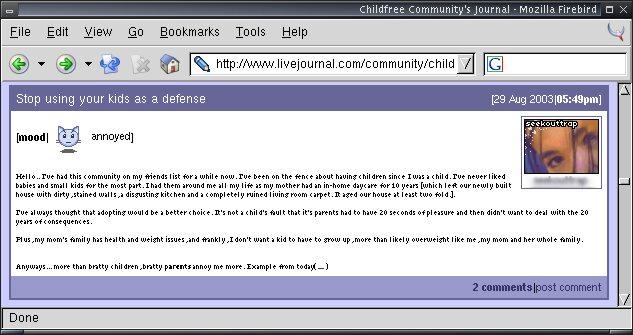

X Window System

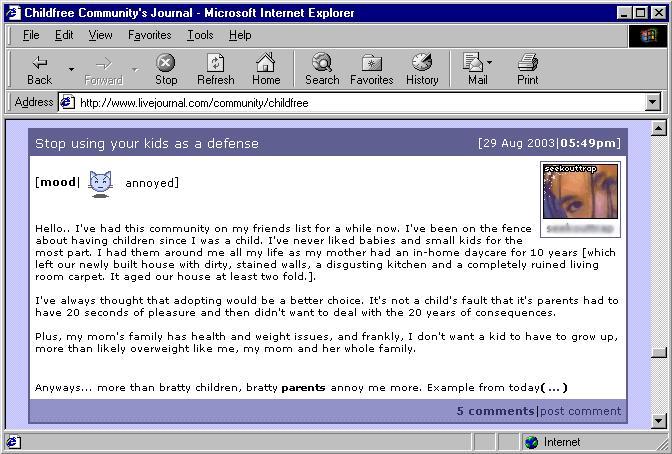

Microsoft Windows

(Of course, I do notice the difference in browsers. Mozilla Firebird for Windows would be more fair, but the machine on which the shot was taken only had 8MB of RAM.)

Quite honestly, even if everyone were to standardise to a single DPI setting (most logically 72dpi, but more probably 96dpi), it'd still be wrong. Why? Using an across-the-board setting ensures that nothing will ever render correctly, unless the screen on which it is viewed actually has that many pixels per inch. The relationship between the DPI setting and actual pixels-per-inch varies between screens, and on any one screen it varies with each resolution.
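A small sketch of why a fixed setting is wrong: it works out how big a glyph physically ends up when the system assumes one DPI and the screen really has another. The function name and the example figures are hypothetical, picked only to illustrate the arithmetic.

```python
def physical_points(nominal_points: float, assumed_dpi: float, actual_ppi: float) -> float:
    """Return the real-world size (in points) of text on screen.

    The system converts points to pixels using assumed_dpi, but the
    screen lays those pixels out at actual_ppi pixels per inch.
    """
    pixels = nominal_points * assumed_dpi / 72   # what the system draws
    inches = pixels / actual_ppi                 # what the screen shows
    return inches * 72                           # back to points


if __name__ == "__main__":
    # Hypothetical case: system assumes 96dpi, screen really has 112 pixels per inch.
    print(round(physical_points(12, 96, 112), 2))
    # When the setting matches the screen, 12pt really is 12pt.
    print(round(physical_points(12, 96, 96), 2))
```

The result only equals the requested size when the assumed DPI matches the screen's real pixel density; any other pairing shrinks or stretches the text.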

The proper solution is to set the DPI correctly for each resolution/screen. Mozilla Firebird will do this just for the browser: it shows you a line, you measure it and input it. It then calculates the proper DPI (1600x1200 on my 21-inch monitor is roughly 112dpi). Problem is, it doesn't seem to affect the font sizes properly.
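The ruler approach boils down to one division. This is only a sketch of the idea, not how Firebird actually implements it; `calibrate_dpi` and the line length used in the example are assumptions.

```python
def calibrate_dpi(line_pixels: int, measured_inches: float) -> float:
    """Compute the real DPI from a line of known pixel length
    and the length the user measured with a ruler."""
    if line_pixels <= 0 or measured_inches <= 0:
        raise ValueError("line length and measurement must be positive")
    return line_pixels / measured_inches


def calibrate_dpi_cm(line_pixels: int, measured_cm: float) -> float:
    """Same calculation for a metric ruler (1 inch = 2.54cm)."""
    return calibrate_dpi(line_pixels, measured_cm / 2.54)


if __name__ == "__main__":
    # Hypothetical: a 224-pixel line that measures 2.0 inches on the glass.
    print(calibrate_dpi(224, 2.0))  # 112.0
```

The longer the line being measured, the less a sloppy ruler reading skews the result.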

It really couldn't be done in pure code, because you'd never have enough information. It requires the ruler approach. [2] That solution won't work across the board, for one reason: people are too fuckin' lazy to set up their computers. A ruler would bloody confuse them.

1: pixels = (points * dots-per-inch) / 72 [to integer precision]

2: Yes, I have thought of pure-code methods, but none of them would work without user input (monitor size), because I'm talking about using existing systems. Even then, it's a really rough estimate.
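For the curious, one such pure-code estimate might look like the sketch below. It assumes the user types in the monitor's diagonal size, that pixels are square, and that the image fills the whole diagonal; `estimate_dpi` is a hypothetical name.

```python
import math


def estimate_dpi(width_px: int, height_px: int, diagonal_inches: float) -> float:
    """Estimate DPI from the resolution and a user-supplied diagonal.

    Assumes square pixels and that the picture spans the full
    quoted diagonal, which is often not true in practice.
    """
    if diagonal_inches <= 0:
        raise ValueError("diagonal must be positive")
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_inches


if __name__ == "__main__":
    print(round(estimate_dpi(1600, 1200, 21), 1))
```

For 1600x1200 on a nominal 21-inch diagonal this gives about 95dpi rather than the roughly 112dpi from the ruler measurement, which is the kind of gap that makes it a really rough estimate.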